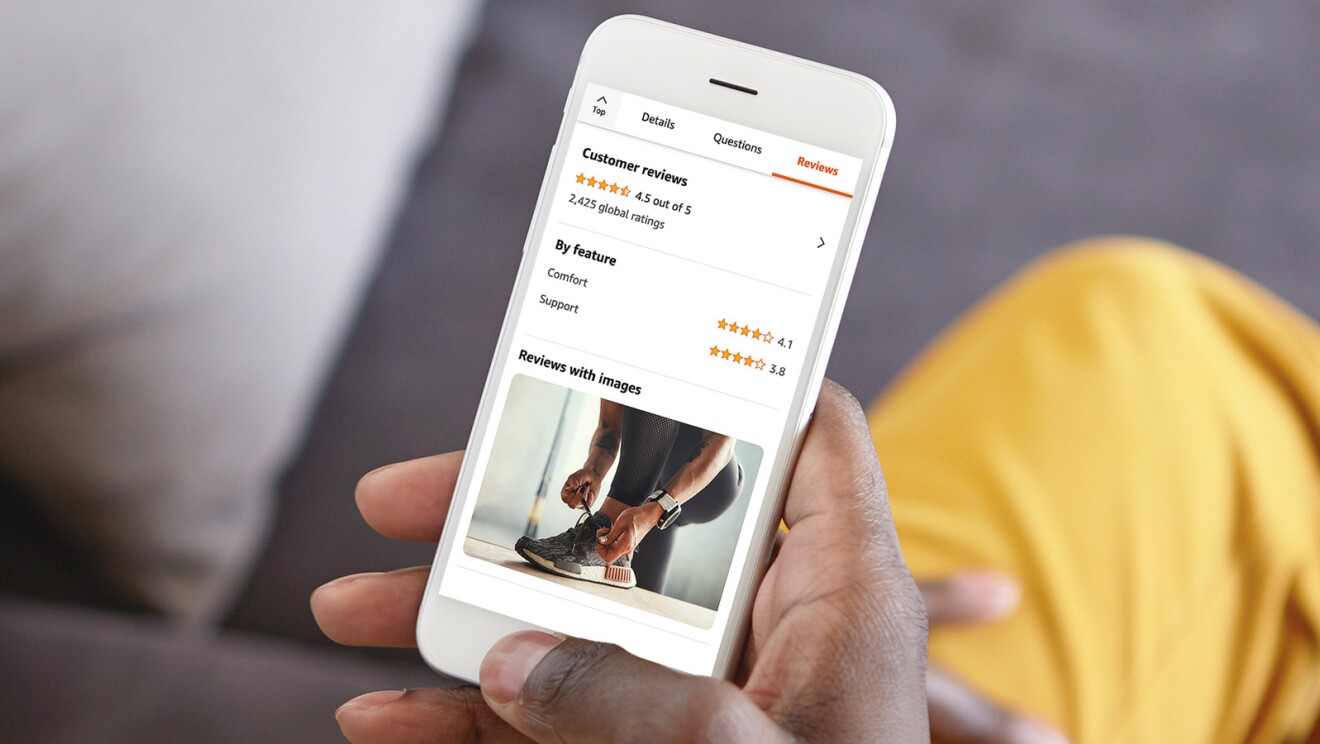

Customer reviews have been a core part of why customers love shopping in Amazon’s stores ever since the company opened in 1995. Amazon makes it easy for customers to leave honest reviews that help inform the purchase decisions of millions of other customers around the world. At the same time, the company makes it hard for bad actors to take advantage of Amazon’s trusted shopping experience.

So, what happens when a customer submits a review? Before a review is published online, Amazon uses artificial intelligence (AI) to analyse it for known indicators that it is fake. The vast majority of reviews pass Amazon’s high bar for authenticity and are posted right away. If potential review abuse is detected, however, the company can take several paths. If Amazon is confident the review is fake, it moves quickly to block or remove the review and takes further action when necessary, including revoking a customer’s review permissions, blocking bad actor accounts, and even litigating against the parties involved. If a review is suspicious but additional evidence is needed, Amazon’s expert investigators, who are specially trained to identify abusive behaviour, look for other signals before taking action. In 2022 alone, Amazon observed and proactively blocked more than 200 million suspected fake reviews in its stores worldwide.

“Fake reviews intentionally mislead customers by providing information that is not impartial, authentic, or intended for that product or service,” said Josh Meek, senior data science manager on Amazon’s Fraud Abuse and Prevention team. “Not only do millions of customers count on the authenticity of reviews on Amazon for purchase decisions, but millions of brands and businesses count on us to accurately identify fake reviews and stop them from ever reaching their customers. We work hard to responsibly monitor and enforce our policies to ensure reviews reflect the views of real customers, and protect honest sellers who rely on us to get it right.”

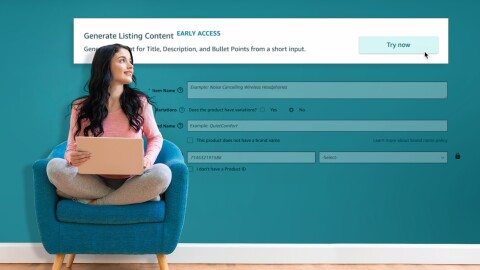

Among other measures, Amazon uses the latest advancements in AI to stop hundreds of millions of suspected fake online reviews, manipulated ratings, fake customer accounts, and other abuses before customers see them. Machine learning models analyse a wide range of proprietary data, including whether the seller has invested in ads (which may be driving additional reviews), customer-submitted reports of abuse, risky behavioural patterns, review history, and more. Large language models are used alongside natural language processing techniques to analyse anomalies in this data that might indicate that a review is fake or was incentivised with a gift card, free product, or some other form of reimbursement. Amazon also uses deep graph neural networks to analyse complex relationships and behaviour patterns, helping to detect and remove groups of bad actors or to flag suspicious activity for investigation.

“The difference between an authentic and fake review is not always clear for someone outside of Amazon to spot,” Meek said. “For example, a product might accumulate reviews quickly because a seller invested in advertising or is offering a great product at the right price. Or, a customer may think a review is fake because it includes poor grammar.”

This is where some of Amazon’s critics get fake review detection wrong: without access to the data signals that reveal patterns of abuse, they have to make big assumptions. Combining advanced technology with proprietary data helps Amazon identify fake reviews more accurately, looking beyond surface-level indicators of abuse to uncover deeper relationships between bad actors.

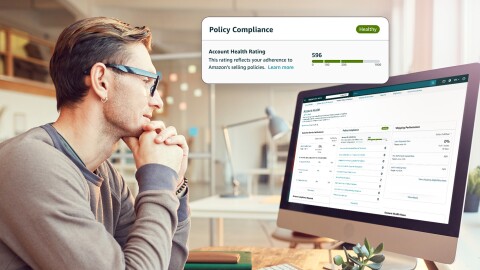

“Maintaining a trustworthy shopping experience is our top priority,” said Rebecca Mond, head of External Relations, Trustworthy Reviews at Amazon. “We continue to invent new ways to improve and stop fake reviews from entering our store and protect our customers so they can shop with confidence.”
